Feb 02, 2026

A decade of TestingConferences.org

2 min read

Jan 19, 2026

Thoughts on Elon Musk (book) by Walter Isaacson

4 min read

Jan 14, 2026

Build vs Buy: API Docs

2 min read

Nov 22, 2025

Installing Ghost Locally on macOS with hot-reload of templates

3 min read

Nov 16, 2025

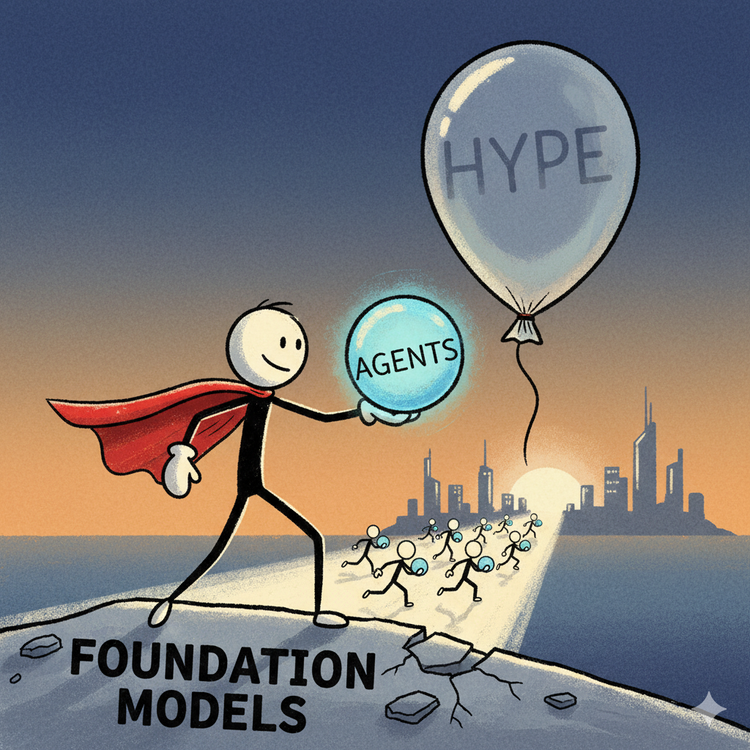

Foundation models and beyond

7 min read

Nov 10, 2025

Things I learned at STELLA

3 min read

Sep 29, 2025

Should you join a Startup?

3 min read

Sep 05, 2025

Developing the Right Test Documentation

4 min read

Aug 15, 2025

Shattering Illusions

3 min read

Mar 09, 2025

Explainable AI

2 min read

Feb 17, 2025

Thoughts on The Worlds I See

5 min read

Feb 07, 2025

2024 In Review

4 min read

Jan 14, 2025

so long, farewell

2 min read

Apr 11, 2024

Push vs Pull Work

5 min read

Mar 16, 2024

2023 in Review

4 min read

Nov 28, 2023

Observability, Monitoring and Testing

3 min read

Nov 10, 2023

Closing the gap between confidence and knowledge

2 min read

Sep 26, 2023

Negotiating an offer: An unreasonable number of reasonable requests

3 min read

Aug 28, 2023

What's a testing manager?

2 min read

Aug 17, 2023

Using ChatGPT to help write scripts

3 min read